Generative AI (GenAI) is quite probably one of the most impactful innovations of our generation. You see it pop up everywhere: it was one of the hot topics at the recent AWS Summit in Amsterdam, Nvidia's shares are going through the roof as demand soars for the chips that power AI, and every self-respecting software vendor has added it (Mendix, of course, with the introduction of Mendix Chat). Probably even the small bakery on the corner uses it! And of course there are some great use cases. Who hasn't used it to summarize a text or get action points from a meeting? You can even use it to set up a business! Need a company name? There are plenty of AI tools for that. The same goes if you need a logo. Why would you write your business plan yourself? Feed ChatGPT the relevant info and you are set. A new company set up in under five minutes. What's not to love, right?

Potential risks of using GenAI for learning purposes

Well, as with pretty much everything, there may also be some downsides. In particular, I think there is a certain tension between using GenAI and learning or studying. I would even argue that AI tools can become a curse when people stop thinking for themselves and rely on them entirely. But before going any further, let's ask ChatGPT how it feels about this.

Question: What are the risks of using ChatGPT or similar tools for study purposes?

Answer: Using ChatGPT or similar AI tools for learning purposes comes with several potential risks:

- Accuracy and Reliability: While AI models like ChatGPT strive for accuracy, they are not infallible. Responses may contain errors, inaccuracies, or outdated information. It’s essential to cross-verify information obtained from AI with reliable sources.

- Bias: AI models can inadvertently reflect the biases present in the data used to train them. This can lead to biased or skewed information being presented. Users should be aware of this potential bias and critically evaluate the information provided.

- Overreliance: Relying too heavily on AI tools for study purposes can hinder critical thinking skills and independent learning. It’s important to balance the use of AI with traditional study methods and personal research.

- Privacy Concerns: Interacting with AI models may involve sharing personal or sensitive information. Users should be cautious about the data they disclose and ensure they are using reputable AI platforms with strong privacy protections.

- Limited Understanding: AI models lack true understanding and consciousness. They can only generate responses based on patterns in the data they were trained on. As a result, they may struggle with nuanced or complex topics and cannot provide the depth of understanding that a human tutor or instructor might offer.

- Ethical Considerations: There are ethical implications to consider when using AI tools, such as the potential for misuse or the impact on human labor (e.g., displacing human tutors or educators). Users should consider the broader ethical implications of relying on AI for study purposes.

To mitigate these risks, users should approach the use of AI tools for study purposes with critical thinking, skepticism, and awareness of their limitations. It’s essential to supplement AI-generated information with human expertise and traditional study methods to ensure a well-rounded learning experience.

Overreliance and limited understanding

First, let's focus on two topics in this list that are tightly connected: overreliance and limited understanding (the third and fifth items). Both are major risks when using AI tools, especially if you are new to a field. Take someone who is just starting out as a Mendix developer. Suppose an unfamiliar situation presents itself: for example, a user story requires a feature you have never implemented before. There are a couple of ways to deal with this. You could try to solve it yourself by working through learning materials, documentation, and experimentation, or you could immediately ask for the solution. Granted, the latter is probably the fastest. But is it also the best? As a Mendix certified trainer, I don't think so. Of course, there have been moments when I begged someone to just give me the answer. I vividly remember going to my dad to ask him for the solution to a math problem and getting frustrated when he wouldn't give it to me. Instead, he asked me counter-questions and let me figure it out through research and experimentation. Was it frustrating? Yes, no doubt about it.

At this point, I would like to take a moment to say to everyone I have ever trained: I feel you… I know what you went through with me. But I will not say sorry. Because, however frustrated I was at not getting the solution when I asked for it, it taught me an important skill: how to figure something out and really understand what I was doing and how something works. That, I believe, is a vital skill, and one that is hard-won. If my dad had given me the solution when I asked for it, I would have known the answer to that specific question. But if I had then been presented with a similar but slightly different problem, would I have known how to solve it? No. The trick I had learned would not have worked, and I would have been back at square one. Now replace my father with an AI tool and the story is the same.

It also happens the other way around: people ask questions on forums to get help with a specific issue, and others "help" them with an answer generated by an AI tool. Quite often these answers are very generic and may not answer the question at all. Overreliance on AI tools leads to a limited understanding of the underlying issues, which in turn leads to ever more reliance on AI tools.

Conclusion

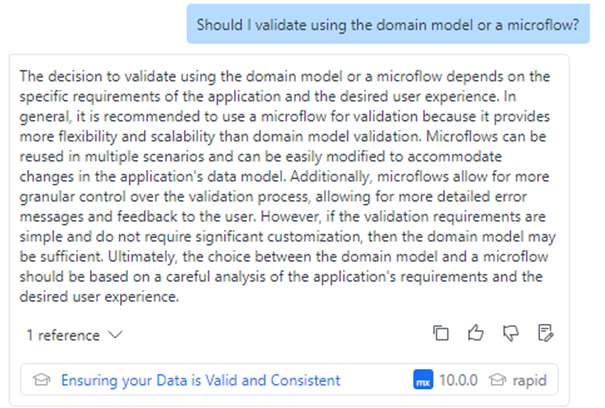

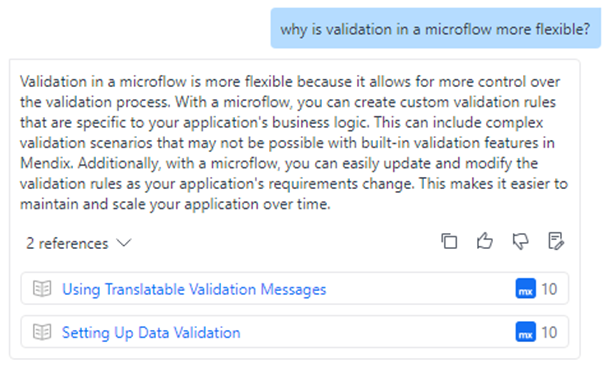

So, what does this mean? Should you, as a (starting) Mendix developer, avoid AI tools altogether? Is ChatGPT always wrong? No, of course not. These tools are wonderful and can be enormously helpful. "Helpful" being the key word here. Developers shouldn't rely solely on tools like ChatGPT. As ChatGPT itself says in response to our query, a developer should always do the groundwork themselves. Really learn and know what you are doing and why you are doing it, not just what to do (which is what a tool like ChatGPT will give you). One of the strengths of GenAI, besides gathering a lot of information very quickly, is that you can have a conversation with it and build on your initial question. For example, if you get an answer and you're not quite sure why it's giving you this particular answer, don't follow it blindly: just ask. "Hi ChatGPT, why is this the best solution to my problem?" Or ask it to explain something in a specific way.

Besides Mendix Chat, Mendix has been adding a variety of AI tools to Studio Pro. The most popular of these is undoubtedly the ability to generate validation microflows automatically. As with ChatGPT or Mendix Chat, having these microflows created automatically instead of building everything by hand saves developers a lot of time.

But here, too, it is important to know why the tool builds the validation microflow the way it does. Why does it use the "sharktooth pattern"? Things like this are important to really understand. If you need a far more complex validation with interdependencies, the automatic validation generator may not give the best results in terms of readability and maintainability. A developer who depends on such a tool may not realize this, potentially resulting in a poorly built application. A developer who has really learned the basics and understands the whys is far less likely to run into this.

There are many more examples that could be discussed, but all in all, I think AI tools are neither a blessing nor a curse for training. If they are used "the right way", they can definitely benefit and speed up the learning process. For example, they can help developers find information much faster than they could on their own. But, as discussed (and this is a big but), if you just use them to get a "how-to" that you follow blindly, without knowing what you are actually doing and why, or simply because "it says so", then they can definitely be a curse.


I think the key takeaway is twofold. Firstly, when you are starting with something that is new to you, make sure you put in the hours, practice, and discuss your outcomes. Dig into how you can do something and why option A may be the better solution for scenario X.

Secondly, learn more about GenAI itself. Understanding better how it works will also help (starting) developers navigate its pitfalls. Developments in the field of GenAI are happening at lightning speed, which makes it difficult to predict what the future will bring. Regardless of this uncertainty, there is great value in Mendix developers learning how to integrate GenAI into their applications and using it to deliver more business value to their customers. Our colleague Freek Brinkhuis, former AWS product manager at Mendix, will dive into this topic soon. Keep an eye out for his blog!

Are you curious to learn more about how GenAI can help your business? Or do you want to know more about the Learning & Development opportunities we provide? Reach out to us at labs@theorangeforce.com.

About the Author

Yves Rocourt is the Learning & Development Manager and a Senior Consultant at The Orange Force. Previously, he led the Mendix Academy and the TimeSeries University. Mendix certifications? He's got them all: he is an Expert-level Mendix developer and an Advanced-level Mendix trainer. He is something of a history buff, having worked as a museum curator before starting with Mendix. Questions about history or Mendix? Join one of his trainings: you can ask him about both!